ICML-2008 Tutorial: Saturday, July 5, 2008

Graphical models and variational methods: Message-passing and

relaxations

Tutorial presenter: Martin Wainwright,

Assistant Professor,

Goals and summary

Graphical models provide a flexible framework for capturing

dependencies among large collections of random variables, and are by

now an essential component of the statistical machine learning

toolbox. Any application of graphical models involves a core set of

computational challenges, centered around the problems of

marginalization, mode-finding, parameter estimation, and structure

estimation. Although efficiently solvable for graphs without cycles

(trees) and graphs of low treewidth more generally, exact solutions to

these core problems are computationally challenging for general

graphical models with large numbers of nodes and/or state space sizes.

Consequently, many applications of graphical models require efficient

methods for computing approximate solutions to these core problems.

The past decade and a half has witnessed an explosion of activity on

approximate algorithms for graphical models.

The goal of this tutorial is to provide a unifying roadmap for

navigating and understanding the broad array of approximate algorithms

for marginalization and learning in graphical models. This tutorial

will show how a wide class of methods----including mean field theory,

sum-product or belief propagation algorithms, expectation-propagation,

and max-product algorithms----are all variational methods, meaning

that they can be understood as algorithms for solving particular

optimization problems on graphs. The perspective also forges

connections to convex optimization, including linear programming and

other type of conic relaxations.

Presenter's background

Martin J. Wainwright is an Assistant Professor at UC Berkeley,

with a joint appointment between the Department of Electrical

Engineering and Computer Sciences, and the Department of Statistics.

His research interests include statistical machine learning,

information theory, statistical signal processing, and

high-dimensional statistics. He received a PhD in EECS from the

Massachusetts Institute of Technology, with a thesis focusing on

graphical models and variational methods, for which he was awarded the

George M. Sprowls Award for outstanding doctoral dissertation. He has

received several awards, including a Sloan Fellowship, an Okawa

Research Fellowship, an NSF CAREER Award, and various conference paper

awards. He has worked extensively on graphical models,

message-passing and variational methods, given various invited talks

and tutorials on the topic, and taught a graduate level course in the

area at UC Berkeley.

Martin Wainwright's webpage

Outline

The tutorial will begin with a quick introduction to graphical models,

before moving onto exponential families and variational formulations.

Various classes of approximate inference algorithms will be derived

from the variational perspective, including belief propagation,

expectation-propagation, mean field, and various convex relaxations.

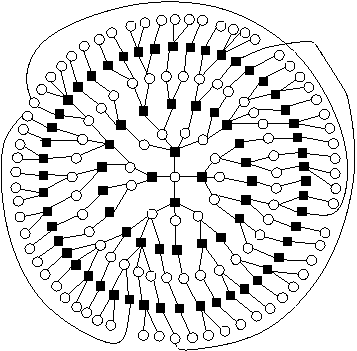

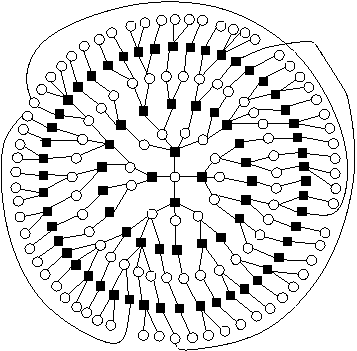

Basics of graphical models

- Directed models, undirected models, factor graphs

- Marginalization, MAP problem, parameter/structure learnin

- Exponential families and maximum entropy

- Mean parameters and global validity

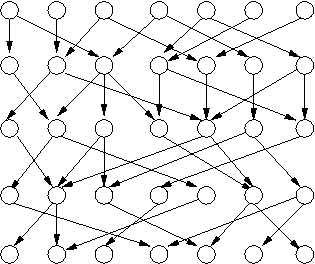

Bethe-Kikuchi, sum-product, and expectation-propagation

- Bethe approximation and belief propagation

- Kikuchi clustering and generalization

- Expectation-propagation

Mean field methods and learning

- Naive and structured mean field

- Variational EM and Bayes

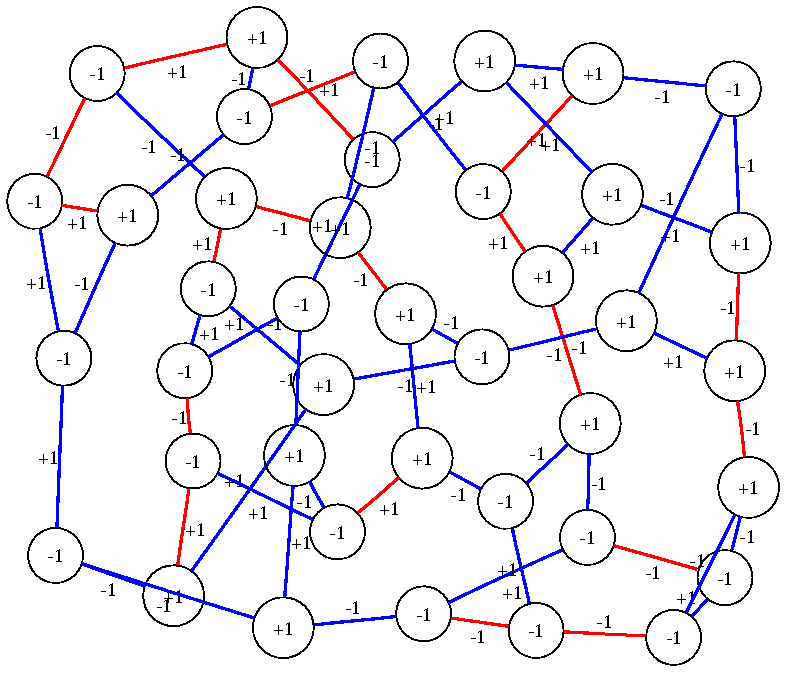

LP relaxations, max-product and other convex relaxations

- Basic tree-based LP relaxation

- Reweighted max-product and LP duality

- Higher-order LP relaxations

- Conic programming relaxations (SOCP, SDP etc.)

Tutorial slides

Slides (PDF)

Slides (2 per page) (PDF)

Intended audience

The literature on message-passing and variational methods can be

difficult to navigate at times, since it draws on a large number

of different areas (optimization, statistics, AI, physics etc.). The goal

of this tutorial is provide a guide to this area, so that researchers

in machine learning can better understand and implement existing

methods, and also tailor variational methods to particular applications

of interest.

We assume a familiarity with the basic concepts of graphical

models (graphs, probability distributions, conditioning, independence,

Markov properties). Those in need of a refresher can consult the

lecture notes and videos available on-line (see Background materials below).

Background and auxiliary materials

Machine Learning Summer School, Taipei, August 2006

Lectures on Graphical Models and Variational Methods.

- Introductory lecture:

Part I

- Advanced topics:

Part II

Tutorial papers and book chapters

Graphical models and variational methods (Wainwright and Jordan, 2005):

Book chapter

Graphical models and variational methods (Wainwright and Jordan, 2003):

The "Monster" technical report