Sen-ching Cheung and Avideh Zakhor (October, 2001)

The amount of information on the world wide web has grown enormously since its creation in 1990. By February 2000, the web had over one billion uniquely indexed pages and 30 million multimedia documents, including audio, video and images. Since there is no central management on the web, duplication of content is inevitable. A study done in 1998 estimated that about 46% of all the text documents on the web have at least one "near-duplicate'' - document which is identical except for low level details such as formatting[1]. The problem is likely to be more severe for web video as they are often stored in multiple locations, different formats and file-sizes to facilitate downloading and streaming. Similar versions of the same video can also be found, unaware of by content creators, when web users modify and republish original contents using video editing tools. Identifying these similar contents is beneficial to many web video applications:

We consider two video sequences to be similar if they share a significant portion of visually similar frames. Our goal is to develop algorithms for video similarity measurement and search, which can be efficiently deployed in very large databases such as the web. In most of the commercial search engines, descriptive text and hyperlink addresses are extracted as meta-data for video retrieval. We find that such meta-data information is inadequate for finding similar video[3]. As a result, our research primarily focuses on similarity detection algorithms in visual domain.

In general, computing similarity between two video sequences requires identifying the closest match for every single frame in each video. Such a process is impractical for large databases - it requires storage of the entire video and computational complexity proportional to the product of the lengths of the two video. In our research, we develop a novel randomized algorithm for measuring video similarity, called the Video Signature Method, that scales well for large databases[2]. The basic idea is to form a small summary or signature for each video, which consists of a small number of its frames that are most similar to a set of random seed images. We show that, by comparing signatures alone, we can provide a good estimate of the underlying video similarity. We subsequently improve the algorithm by optimally selecting the most robust portion of a signature for comparison. Based on a ground-truth set composed of diverse web video, a simple signature-distance thresholding scheme can achieve 80% recall and 85% precision with nine signature frames per video.

As video signatures consists of high-dimensional feature vectors, similarity search on a large signature database is a time-consuming process. A typical strategy to speed up the search is to approximate high-dimensional signatures with low-dimensional index vectors. Distance between low-dimensional index vectors can be computed very efficiently with various database access methods. The key step is to develop efficient dimension reduction algorithms such that distances in signature space can be well estimated by using the corresponding index vectors. In our work, we investigate non-linear dimension reduction techniques for video signatures. We have shown that by applying our dimension reduction algorithm, 99% of signature comparisons can be eliminated with less than 10% loss in recall performance[3]. The proposed algorithm is based on the "triangle-inequality based pruning'' methods in which a low dimensional index vector is composed of distance values between the corresponding signature and a set of "key'' vectors. We improve upon the original scheme by devising an algorithm to optimally selecting key vectors in order to minimize the estimated distortion. Under perfect recall, our technique outperforms the popular FASTMAP indexing in complexity reduction when the dimension of index vectors is no bigger than nine[5].

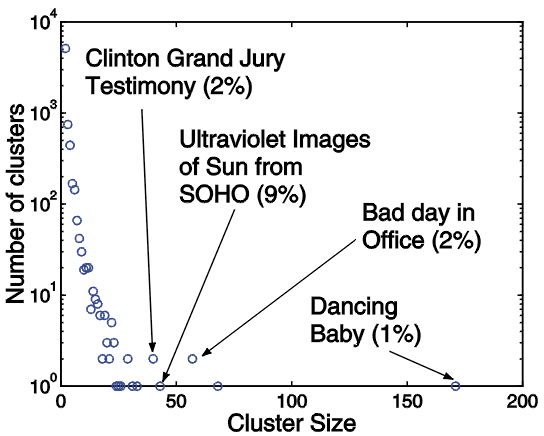

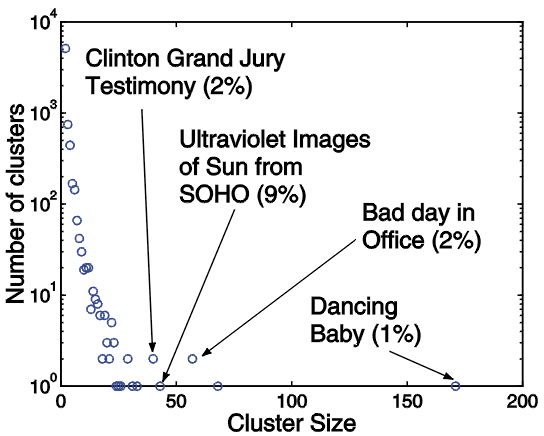

Due to diversity among web video, applying a single constant threshold to signature distances may not be able to identify all similar video from a large database. Retrieval performance can be improved by considering all distance relationships among individual video sequences in the database. In order to exploit such relationships, we develop a novel clustering algorithm to identify compact clusters in the database. This algorithm is able to adapt to local statistics and robust against sampling error in video signatures. Similar clusters are identified as "almost-complete'' connected components from the proximity graph of all video signatures at many distance thresholds[4]. Using our clustering technique, the retrieval performance on ground-truth data improves to 85% recall and 95% precision. Since we are also interested in understanding how similar video are distributed in the web, we apply our clustering algorithm to a large number of video crawled from the web. Between September and December in 1999, we have gathered approximately 46,500 video clips of various formats from the web. Our clustering algorithm produces a total of 6,900 clusters from this dataset and the average cluster size is 2.81. The distribution of cluster size is shown in figure 1. It is highly skewed with more than half of the video not having a single similar version. Nonetheless, as popular contents are likely to have multiple versions, large similar clusters should be of higher importance to users. We label some of the largest clusters in figure 1. Grouping video into similar clusters can also provide better visual organization of search results. We have built a prototype search engine that demonstrates how similar clusters can be used in typical web video search. This search engine can be accessed through our web-site at http://www.eecs.berkeley.edu/~cheungsc/cluster/search.html.